|

OpenANN

1.1.0

An open source library for artificial neural networks.

|


Namespaces | |

| FANN | |

| LibSVM | |

Classes | |

| class | AdaBoost |

| Adaptive Boosting. More... | |

| class | Bagging |

| Bootstrap Aggregating. More... | |

| class | CompressionMatrixFactory |

| Creates several types of matrices for compression. More... | |

| class | Compressor |

| Compresses arbitrary one-dimensional data. More... | |

| class | EnsembleLearner |

| class | Evaluator |

| Evaluates a Learner. More... | |

| class | MulticlassEvaluator |

| Evaluates learners for multiclass problems. More... | |

| class | IntrinsicPlasticity |

| Learns the parameters of a logistic sigmoid activation function. More... | |

| class | DataSet |

| Data set interface. More... | |

| class | DataSetView |

| An index-based dataset wrapper for representing efficient dataset views on any DataSet instance. More... | |

| class | DataStream |

| Streams training data for online training. More... | |

| class | DirectStorageDataSet |

| Stores the inputs and outputs of the data set directly in two matrices. More... | |

| struct | FloatingPointFormatter |

| Wraps a value and its precision for logging. More... | |

| class | Log |

| Global logger. More... | |

| class | Logger |

| A local logger that can redirect messages to several targets. More... | |

| class | WeightedDataSet |

| Resampled dataset based on the original dataset. More... | |

| class | KMeans |

| K-means clustering. More... | |

| class | AlphaBetaFilter |

| A recurrent layer that can be used to smooth the input and estimate its derivative. More... | |

| class | Compressed |

| Fully connected layer with compressed weights. More... | |

| class | Convolutional |

| Applies a learnable filter on a 2D or 3D input. More... | |

| class | Dropout |

| Dropout mask. More... | |

| class | Extreme |

| Fully connected layer with fixed random weights. More... | |

| class | FullyConnected |

| Fully connected layer. More... | |

| class | Input |

| Input layer. More... | |

| class | OutputInfo |

| Provides information about the output of a layer. More... | |

| class | Layer |

| Interface that has to be implemented by all layers of a neural network that can be trained with backpropagation. More... | |

| class | LocalResponseNormalization |

| Local response normalization. More... | |

| class | MaxPooling |

| Performs max-pooling on 2D input feature maps. More... | |

| class | SigmaPi |

| Fully connected higher-order layer. More... | |

| struct | DistanceConstraint |

| Common constraint for encoding translation invariances into a SigmaPi layer. More... | |

| struct | SlopeConstraint |

| Common constraint for encoding translation and scale invariances into a SigmaPi layer. More... | |

| struct | TriangleConstraint |

| Common constraint for encoding translation, scale and rotation invariance into a SigmaPi layer. More... | |

| class | Subsampling |

| Performs average pooling on 2D input feature maps. More... | |

| class | Learner |

| Common base class of all learning algorithms. More... | |

| class | Net |

| Feedforward multilayer neural network. More... | |

| class | Normalization |

| Normalize data so that for each feature the mean is 0 and the standard deviation is 1. More... | |

| class | OpenANNLibraryInfo |

| Provides information about the OpenANN library. More... | |

| class | CG |

| Conjugate Gradient. More... | |

| class | IPOPCMAES |

| Evolution Strategies with Covariance Matrix Adaptation and a restart strategy that increases the population size (IPOP-CMA-ES). More... | |

| class | LBFGS |

| Limited storage Broyden-Fletcher-Goldfarb-Shanno. More... | |

| class | LMA |

| Levenberg-Marquardt Algorithm. More... | |

| class | MBSGD |

| Mini-batch stochastic gradient descent. More... | |

| class | Optimizable |

| Represents an optimizable object. More... | |

| class | Optimizer |

| The common interface of all optimization algorithms. More... | |

| class | StoppingCriteria |

| Stopping criteria for optimization algorithms. More... | |

| class | StoppingInterrupt |

| A system-independent interface for checking interrupts that can signal the end of the optimization process externally. More... | |

| class | PCA |

| Principal component analysis. More... | |

| class | RBM |

| Restricted Boltzmann Machine. More... | |

| class | Regularization |

| Holds all information related to regularization terms in an error function. More... | |

| class | ActionSpace |

| Represents the action space in a reinforcement learning problem. More... | |

| class | Agent |

| A (learning) agent in a reinforcement learning problem. More... | |

| class | Environment |

| A reinforcement learning environment. More... | |

| class | RandomAgent |

| Chooses actions randomly. More... | |

| class | StateSpace |

| Represents the state space in a reinforcement learning problem. More... | |

| class | SparseAutoEncoder |

| A sparse auto-encoder tries to reconstruct the inputs from a compressed representation. More... | |

| class | Transformer |

| Common base for all transformations. More... | |

| class | OpenANNException |

| This exception is thrown for all logical errors that occur in OpenANN API calls that are not time critical. More... | |

| class | RandomNumberGenerator |

| A utility class that simplifies the generation of random numbers. More... | |

| class | ZCAWhitening |

| Zero phase component analysis whitening transformation. More... | |

Enumerations | |

| enum | ActivationFunction { LOGISTIC = 0, TANH = 1, TANH_SCALED = 2, RECTIFIER = 3, LINEAR = 4, SOFTMAX = 4 } |

| enum | ErrorFunction { NO_E_DEFINED, MSE, CE } |

| Error function that will be minimized. More... | |

Functions | |

| void | activationFunction (ActivationFunction act, const Eigen::MatrixXd &a, Eigen::MatrixXd &z) |

| void | activationFunctionDerivative (ActivationFunction act, const Eigen::MatrixXd &z, Eigen::MatrixXd &gd) |

| void | softmax (Eigen::MatrixXd &y) |

| void | logistic (const Eigen::MatrixXd &a, Eigen::MatrixXd &z) |

| void | logisticDerivative (const Eigen::MatrixXd &z, Eigen::MatrixXd &gd) |

| void | normaltanh (const Eigen::MatrixXd &a, Eigen::MatrixXd &z) |

| void | normaltanhDerivative (const Eigen::MatrixXd &z, Eigen::MatrixXd &gd) |

| void | scaledtanh (const Eigen::MatrixXd &a, Eigen::MatrixXd &z) |

| void | scaledtanhDerivative (const Eigen::MatrixXd &z, Eigen::MatrixXd &gd) |

| void | rectifier (const Eigen::MatrixXd &a, Eigen::MatrixXd &z) |

| void | rectifierDerivative (const Eigen::MatrixXd &z, Eigen::MatrixXd &gd) |

| void | linear (const Eigen::MatrixXd &a, Eigen::MatrixXd &z) |

| void | linearDerivative (Eigen::MatrixXd &gd) |

| void | train (Net &net, std::string algorithm, ErrorFunction errorFunction, const StoppingCriteria &stop, bool reinitialize=false, bool dropout=false) |

| Train a feedforward neural network with supervised learning. More... | |

| void | makeMLNN (Net &net, ActivationFunction g, ActivationFunction h, int D, int F, int H,...) |

| Create a multilayer neural network. More... | |

| template<typename Derived1 , typename Derived2 > | |

| double | crossEntropy (const Eigen::MatrixBase< Derived1 > &Y, const Eigen::MatrixBase< Derived2 > &T) |

| Compute mean cross entropy. More... | |

| template<typename Derived > | |

| double | meanSquaredError (const Eigen::MatrixBase< Derived > &YmT) |

| Compute mean squared error. More... | |

| double | sse (Learner &learner, DataSet &dataSet) |

| Sum of squared errors. More... | |

| double | mse (Learner &learner, DataSet &dataSet) |

| Mean squared error. More... | |

| double | rmse (Learner &learner, DataSet &dataSet) |

| Root mean squared error. More... | |

| double | ce (Learner &learner, DataSet &dataSet) |

| Cross entropy. More... | |

| double | accuracy (Learner &learner, DataSet &dataSet) |

| Accuracy. More... | |

| double | weightedAccuracy (Learner &learner, DataSet &dataSet, Eigen::VectorXd weights) |

| Accuracy on weighted data set. More... | |

| Eigen::MatrixXi | confusionMatrix (Learner &learner, DataSet &dataSet) |

| Confusion matrix. More... | |

| int | classificationHits (Learner &learner, DataSet &dataSet) |

| Classification hits. More... | |

| double | crossValidation (int folds, Learner &learner, DataSet &dataSet, Optimizer &opt) |

| Cross-validation. More... | |

| int | oneOfCDecoding (const Eigen::VectorXd &target) |

| One-of-c decoding. More... | |

| void | split (std::vector< DataSetView > &groups, DataSet &dataset, int numberOfGroups, bool shuffling=true) |

| Split the current DataSet into a specific number of DataSetView groups. More... | |

| void | split (std::vector< DataSetView > &groups, DataSet &dataset, double ratio=0.5, bool shuffling=true) |

| Split the current DataSet into two DataSetViews whose sizes are controlled by a ratio parameter. More... | |

| void | merge (DataSetView &merging, std::vector< DataSetView > &groups) |

| Merge all instances viewed by a group of DataSetViews into an existing DataSetView. More... | |

| DataSetView | sample (DataSet &dataSet, double fraction, bool replacement) |

| Sample random instances from the original data set. More... | |

| std::ostream & | operator<< (std::ostream &os, const FloatingPointFormatter &t) |

| Logger & | operator<< (Logger &logger, const FloatingPointFormatter &t) |

| template<typename T > | |

| Logger & | operator<< (Logger &logger, const T &t) |

| void | useAllCores () |

| Enable all cores for OpenMP. More... | |

| void | scaleData (Eigen::MatrixXd &data, double min=-1.0, double max=1.0) |

| Scale all values to the interval [min, max]. More... | |

| void | filter (const Eigen::MatrixXd &x, Eigen::MatrixXd &y, const Eigen::MatrixXd &b, const Eigen::MatrixXd &a) |

| Apply a (numerically stable) filter (FIR or IIR) on the input signal. More... | |

| void | downsample (const Eigen::MatrixXd &y, Eigen::MatrixXd &d, int downSamplingFactor) |

| Downsample an input signal. More... | |

| Eigen::MatrixXd | sampleRandomPatches (const Eigen::MatrixXd &images, int channels, int rows, int cols, int samples, int patchRows, int patchCols) |

| Extract random patches from images. More... | |

| template<class T > | |

| bool | equals (T a, T b, T delta) |

| template<class T > | |

| bool | isNaN (T value) |

| template<class T > | |

| bool | isInf (T value) |

| double OpenANN::accuracy | ( | Learner & | learner, |

| DataSet & | dataSet | ||

| ) |

Accuracy.

The percentage of correct predictions in a classification problem.

| learner | learned model |

| dataSet | dataset |
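For 1-of-c encoded targets, the quantity described above can be sketched as follows. This is a self-contained illustration on `std::vector` rather than OpenANN's `Learner`/`DataSet` types; the names `argmaxIndex` and `accuracySketch` are hypothetical, the predictions are assumed to be precomputed, and the sketch returns a fraction in [0, 1] (multiply by 100 for a percentage).

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical helper: position of the largest entry, i.e. the decoded class.
int argmaxIndex(const std::vector<double>& v)
{
  assert(!v.empty());
  return static_cast<int>(
      std::distance(v.begin(), std::max_element(v.begin(), v.end())));
}

// Fraction of instances whose predicted class equals the target class.
// predictions[n] and targets[n] belong to the same instance n.
double accuracySketch(const std::vector<std::vector<double>>& predictions,
                      const std::vector<std::vector<double>>& targets)
{
  assert(!targets.empty() && predictions.size() == targets.size());
  std::size_t hits = 0;
  for (std::size_t n = 0; n < targets.size(); ++n)
    if (argmaxIndex(predictions[n]) == argmaxIndex(targets[n]))
      ++hits;
  return static_cast<double>(hits) / static_cast<double>(targets.size());
}
```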

| void OpenANN::activationFunction | ( | ActivationFunction | act, |

| const Eigen::MatrixXd & | a, | ||

| Eigen::MatrixXd & | z | ||

| ) |

| void OpenANN::activationFunctionDerivative | ( | ActivationFunction | act, |

| const Eigen::MatrixXd & | z, | ||

| Eigen::MatrixXd & | gd | ||

| ) |

| double OpenANN::ce | ( | Learner & | learner, |

| DataSet & | dataSet | ||

| ) |

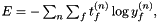

Cross entropy.

This error function is usually used for classification problems.

where

where  represents the actual probability

represents the actual probability  and

and  is the prediction of the learner.

is the prediction of the learner.

| learner | learned model |

| dataSet | dataset |
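A direct transcription of the cross-entropy sum into C++ might look like the following sketch. It uses hypothetical names and operates on precomputed predictions instead of OpenANN's `Learner` and `DataSet`. Terms with a zero target are skipped, which leaves the sum unchanged and avoids evaluating log(0).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// E = -sum_n sum_f t_f^(n) * log(y_f^(n)), where predictions[n][f] = y_f^(n)
// and targets[n][f] = t_f^(n).
double crossEntropySketch(const std::vector<std::vector<double>>& predictions,
                          const std::vector<std::vector<double>>& targets)
{
  assert(predictions.size() == targets.size());
  double error = 0.0;
  for (std::size_t n = 0; n < targets.size(); ++n)
  {
    assert(predictions[n].size() == targets[n].size());
    for (std::size_t f = 0; f < targets[n].size(); ++f)
      if (targets[n][f] > 0.0)  // zero targets add nothing; skipping avoids log(0)
        error -= targets[n][f] * std::log(predictions[n][f]);
  }
  return error;
}
```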

| int OpenANN::classificationHits | ( | Learner & | learner, |

| DataSet & | dataSet | ||

| ) |

Classification hits.

| learner | learned model |

| dataSet | dataset; the targets are assumed to be 1-of-c encoded if they have 2 or more components; otherwise the output is assumed to lie within [0, 1], where values of 0.5 or greater belong to one class and all other values to the other class |

| Eigen::MatrixXi OpenANN::confusionMatrix | ( | Learner & | learner, |

| DataSet & | dataSet | ||

| ) |

Confusion matrix.

Requires one-of-c encoded labels. Each row of the matrix corresponds to an actual label and each column to a label predicted by the learner.

| learner | learned model |

| dataSet | dataset |
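The row/column convention can be illustrated with a small sketch on `std::vector`. The names are hypothetical, the predictions are assumed to be precomputed, and classes are decoded by taking the largest entry of each 1-of-c vector, which is an assumption about how OpenANN decodes them.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical helper: decoded class of a 1-of-c encoded vector.
std::size_t decodedClass(const std::vector<double>& v)
{
  assert(!v.empty());
  return static_cast<std::size_t>(
      std::distance(v.begin(), std::max_element(v.begin(), v.end())));
}

// cm[actual][predicted] counts how often an instance of class `actual`
// was predicted as class `predicted`.
std::vector<std::vector<int>> confusionMatrixSketch(
    const std::vector<std::vector<double>>& predictions,
    const std::vector<std::vector<double>>& targets)
{
  assert(!targets.empty() && predictions.size() == targets.size());
  const std::size_t classes = targets[0].size();
  std::vector<std::vector<int>> cm(classes, std::vector<int>(classes, 0));
  for (std::size_t n = 0; n < targets.size(); ++n)
    ++cm[decodedClass(targets[n])][decodedClass(predictions[n])];
  return cm;
}
```

The diagonal holds the correctly classified instances, so its sum equals the number of classification hits under the same decoding rule.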

| double OpenANN::crossEntropy | ( | const Eigen::MatrixBase< Derived1 > & | Y, |

| const Eigen::MatrixBase< Derived2 > & | T | ||

| ) |

Compute mean cross entropy.

| Derived1 | matrix type |

| Derived2 | matrix type |

| Y | each row contains a prediction |

| T | each row contains a target |

| double OpenANN::crossValidation | ( | int | folds, |

| Learner & | learner, | ||

| DataSet & | dataSet, | ||

| Optimizer & | opt | ||

| ) |

Cross-validation.

| folds | number of cross-validation folds |

| learner | learner |

| dataSet | dataset |

| opt | optimization algorithm |
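As a generic illustration, not OpenANN's implementation, k-fold cross-validation trains on k-1 folds, evaluates on the remaining fold, and averages over the k rounds. The sketch assigns instance n to fold n mod k (an assumption) and uses a hypothetical callback `trainAndEvaluate` in place of OpenANN's `Learner` and `Optimizer`. Which score OpenANN's `crossValidation` actually returns is not stated on this page.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Indices = std::vector<std::size_t>;

// Generic k-fold cross-validation. trainAndEvaluate is a hypothetical callback
// that trains a fresh model on the training indices and returns its score on
// the test indices; the function returns the mean score over all folds.
double crossValidationSketch(
    int folds, std::size_t samples,
    const std::function<double(const Indices&, const Indices&)>& trainAndEvaluate)
{
  assert(folds >= 2 && samples >= static_cast<std::size_t>(folds));
  const std::size_t k = static_cast<std::size_t>(folds);
  double total = 0.0;
  for (std::size_t fold = 0; fold < k; ++fold)
  {
    Indices train, test;
    for (std::size_t n = 0; n < samples; ++n)
      (n % k == fold ? test : train).push_back(n);  // instance n -> fold n mod k
    total += trainAndEvaluate(train, test);
  }
  return total / static_cast<double>(k);
}
```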

| void OpenANN::downsample | ( | const Eigen::MatrixXd & | y, |

| Eigen::MatrixXd & | d, | ||

| int | downSamplingFactor | ||

| ) |

Downsample an input signal.

| y | input signal |

| d | downsampled signal |

| downSamplingFactor | downsampling factor |
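The page does not say whether `downsample` averages neighboring samples or simply decimates. The simplest interpretation, keeping every `downSamplingFactor`-th sample, is sketched here on a 1-D `std::vector`; the name `downsampleSketch` is hypothetical, and OpenANN itself works on `Eigen::MatrixXd`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Keeps samples 0, factor, 2*factor, ... of the input signal.
std::vector<double> downsampleSketch(const std::vector<double>& y, int factor)
{
  assert(factor >= 1);
  const std::size_t step = static_cast<std::size_t>(factor);
  std::vector<double> d;
  d.reserve((y.size() + step - 1) / step);
  for (std::size_t i = 0; i < y.size(); i += step)
    d.push_back(y[i]);
  return d;
}
```

Dropping samples without first removing frequencies above the new Nyquist limit causes aliasing, so a low-pass filter is commonly applied before decimation.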

| bool OpenANN::equals | ( | T | a, |

| T | b, | ||

| T | delta | ||

| ) |

| void OpenANN::filter | ( | const Eigen::MatrixXd & | x, |

| Eigen::MatrixXd & | y, | ||

| const Eigen::MatrixXd & | b, | ||

| const Eigen::MatrixXd & | a | ||

| ) |

Apply a (numerically stable) filter (FIR or IIR) on the input signal.

| x | input signal |

| y | output, filtered signal |

| b | feedforward filter coefficients |

| a | feedback filter coefficients |
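Assuming the coefficients follow the usual difference-equation convention (as in MATLAB's `filter`), where `b` holds the feedforward and `a` the feedback coefficients, a plain direct-form evaluation on a 1-D signal looks like this. It is a hypothetical sketch and makes no claim about the numerical-stability measures OpenANN uses; how OpenANN lays out signals in its matrices is also not stated here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Direct evaluation of the difference equation
//   a[0]*y[n] = sum_k b[k]*x[n-k] - sum_{k>=1} a[k]*y[n-k],
// with x[n] = y[n] = 0 for n < 0.
std::vector<double> filterSketch(const std::vector<double>& x,
                                 const std::vector<double>& b,
                                 const std::vector<double>& a)
{
  assert(!a.empty() && a[0] != 0.0);
  std::vector<double> y(x.size(), 0.0);
  for (std::size_t n = 0; n < x.size(); ++n)
  {
    double acc = 0.0;
    for (std::size_t k = 0; k < b.size() && k <= n; ++k)
      acc += b[k] * x[n - k];  // feedforward (FIR) part
    for (std::size_t k = 1; k < a.size() && k <= n; ++k)
      acc -= a[k] * y[n - k];  // feedback (IIR) part
    y[n] = acc / a[0];
  }
  return y;
}
```

With `a = {1.0}` the feedback sum is empty and the filter is FIR; any further entries in `a` make it IIR.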

| bool OpenANN::isInf | ( | T | value) |

| bool OpenANN::isNaN | ( | T | value) |

| void OpenANN::linear | ( | const Eigen::MatrixXd & | a, |

| Eigen::MatrixXd & | z | ||

| ) |

| void OpenANN::linearDerivative | ( | Eigen::MatrixXd & | gd) |

| void OpenANN::logistic | ( | const Eigen::MatrixXd & | a, |

| Eigen::MatrixXd & | z | ||

| ) |

| void OpenANN::logisticDerivative | ( | const Eigen::MatrixXd & | z, |

| Eigen::MatrixXd & | gd | ||

| ) |
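The derivative functions take the activation output `z` rather than the net input `a`, which suggests that the derivatives are expressed in terms of the output. For the logistic function z = 1 / (1 + exp(-a)) this is possible because dz/da = z(1 - z). A sketch on `std::vector` with hypothetical names (OpenANN operates on `Eigen::MatrixXd`):

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Element-wise logistic sigmoid z = 1 / (1 + exp(-a)).
std::vector<double> logisticSketch(const std::vector<double>& a)
{
  std::vector<double> z(a.size());
  for (std::size_t i = 0; i < a.size(); ++i)
    z[i] = 1.0 / (1.0 + std::exp(-a[i]));
  return z;
}

// Derivative computed from the output alone: dz/da = z * (1 - z).
std::vector<double> logisticDerivativeSketch(const std::vector<double>& z)
{
  std::vector<double> gd(z.size());
  for (std::size_t i = 0; i < z.size(); ++i)
  {
    assert(z[i] >= 0.0 && z[i] <= 1.0);  // z must be a logistic output
    gd[i] = z[i] * (1.0 - z[i]);
  }
  return gd;
}
```

The same idea gives 1 - z^2 for the plain hyperbolic tangent.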

| void OpenANN::makeMLNN | ( | Net & | net, |

| ActivationFunction | g, | ||

| ActivationFunction | h, | ||

| int | D, | ||

| int | F, | ||

| int | H, | ||

| ... | |||

| ) |

Create a multilayer neural network.

| net | neural network |

| g | activation function in hidden layers |

| h | activation function in output layer |

| D | number of inputs |

| F | number of outputs |

| H | number of hidden layers |

| ... | numbers of hidden units |

| double OpenANN::meanSquaredError | ( | const Eigen::MatrixBase< Derived > & | YmT) |

Compute mean squared error.

| Derived | matrix type |

| YmT | each row contains the difference of a prediction and a target |

| void OpenANN::merge | ( | DataSetView & | merging, |

| std::vector< DataSetView > & | groups | ||

| ) |

Merge all instances viewed by a group of DataSetViews into an existing DataSetView.

This function is declared as a friend of DataSetView so that it can access the indices of the views directly.

| merging | the destination DataSetView that will afterwards contain all instances from the groups |

| groups | the DataSetViews that should be merged into the destination |

| double OpenANN::mse | ( | Learner & | learner, |

| DataSet & | dataSet | ||

| ) |

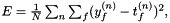

Mean squared error.

This error function is usually used for regression problems.

where

where  is the predicted output,

is the predicted output,  the desired output and

the desired output and  is size of the dataset.

is size of the dataset.

| learner | learned model |

| dataSet | dataset |
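The mean squared error formula translates directly into the sketch below (hypothetical names, precomputed predictions on `std::vector`). Note that N counts instances, not individual output values, so with several outputs per instance this differs from averaging over every matrix entry.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// E = (1/N) * sum_n sum_f (y_f^(n) - t_f^(n))^2 with N = number of instances.
double mseSketch(const std::vector<std::vector<double>>& predictions,
                 const std::vector<std::vector<double>>& targets)
{
  assert(!targets.empty() && predictions.size() == targets.size());
  double sum = 0.0;  // this running total is the sum of squared errors
  for (std::size_t n = 0; n < targets.size(); ++n)
  {
    assert(predictions[n].size() == targets[n].size());
    for (std::size_t f = 0; f < targets[n].size(); ++f)
    {
      const double diff = predictions[n][f] - targets[n][f];
      sum += diff * diff;
    }
  }
  return sum / static_cast<double>(targets.size());
}
```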

| void OpenANN::normaltanh | ( | const Eigen::MatrixXd & | a, |

| Eigen::MatrixXd & | z | ||

| ) |

| void OpenANN::normaltanhDerivative | ( | const Eigen::MatrixXd & | z, |

| Eigen::MatrixXd & | gd | ||

| ) |

| int OpenANN::oneOfCDecoding | ( | const Eigen::VectorXd & | target) |

One-of-c decoding.

If the vector has length 1, its single entry is interpreted as the probability of class 1.

| target | vector that represents a 1-of-c encoded class label |
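A possible decoding rule is sketched below. The 0.5 threshold for length-1 vectors is an assumption borrowed from the description of `classificationHits`; for longer vectors the index of the largest entry is returned, and `std::max_element` resolves ties in favor of the first maximum. The name `oneOfCDecodingSketch` is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <vector>

// Decodes a 1-of-c encoded vector into a class index.
int oneOfCDecodingSketch(const std::vector<double>& target)
{
  assert(!target.empty());
  if (target.size() == 1)
    return target[0] >= 0.5 ? 1 : 0;  // assumed threshold for the 1-D case
  return static_cast<int>(std::distance(
      target.begin(), std::max_element(target.begin(), target.end())));
}
```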

| std::ostream& OpenANN::operator<< | ( | std::ostream & | os, |

| const FloatingPointFormatter & | t | ||

| ) |

| Logger& OpenANN::operator<< | ( | Logger & | logger, |

| const FloatingPointFormatter & | t | ||

| ) |

| Logger& OpenANN::operator<< | ( | Logger & | logger, |

| const T & | t | ||

| ) |

| void OpenANN::rectifier | ( | const Eigen::MatrixXd & | a, |

| Eigen::MatrixXd & | z | ||

| ) |

| void OpenANN::rectifierDerivative | ( | const Eigen::MatrixXd & | z, |

| Eigen::MatrixXd & | gd | ||

| ) |

| double OpenANN::rmse | ( | Learner & | learner, |

| DataSet & | dataSet | ||

| ) |

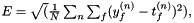

Root mean squared error.

This error function is usually used for regression problems.

where

where  is the predicted output,

is the predicted output,  the desired output and

the desired output and  is size of the dataset.

is size of the dataset.

| learner | learned model |

| dataSet | dataset |
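Because the square root is taken after averaging, the RMSE is expressed in the same units as the outputs. A minimal sketch of the formula on precomputed predictions (hypothetical name, `std::vector` instead of OpenANN's types):

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// E = sqrt((1/N) * sum_n sum_f (y_f^(n) - t_f^(n))^2), N = number of instances.
double rmseSketch(const std::vector<std::vector<double>>& predictions,
                  const std::vector<std::vector<double>>& targets)
{
  assert(!targets.empty() && predictions.size() == targets.size());
  double sum = 0.0;
  for (std::size_t n = 0; n < targets.size(); ++n)
    for (std::size_t f = 0; f < targets[n].size(); ++f)
    {
      const double diff = predictions[n][f] - targets[n][f];
      sum += diff * diff;
    }
  return std::sqrt(sum / static_cast<double>(targets.size()));
}
```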

| DataSetView OpenANN::sample | ( | DataSet & | dataSet, |

| double | fraction, | ||

| bool | replacement | ||

| ) |

Sample random instances from the original data set.

| dataSet | original data set |

| fraction | fraction of the original data set's size, must be within (0, 1) |

| replacement | draw with or without replacement |
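The difference between the two sampling modes can be illustrated by sampling instance indices. In this hypothetical sketch, the number of drawn indices is `fraction * size` truncated to an integer, which is an assumption; OpenANN returns a `DataSetView` rather than an index list.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Returns the indices of the sampled instances.
std::vector<std::size_t> sampleIndicesSketch(std::size_t size, double fraction,
                                             bool replacement, std::mt19937& rng)
{
  assert(size > 0 && fraction > 0.0 && fraction < 1.0);
  const std::size_t count =
      static_cast<std::size_t>(fraction * static_cast<double>(size));
  std::vector<std::size_t> indices;
  if (replacement)
  {
    // Each draw is independent, so the same instance may appear repeatedly.
    std::uniform_int_distribution<std::size_t> pick(0, size - 1);
    for (std::size_t i = 0; i < count; ++i)
      indices.push_back(pick(rng));
  }
  else
  {
    // Shuffle all indices and keep a prefix: every instance appears at most once.
    std::vector<std::size_t> all(size);
    std::iota(all.begin(), all.end(), std::size_t{0});
    std::shuffle(all.begin(), all.end(), rng);
    indices.assign(all.begin(), all.begin() + static_cast<std::ptrdiff_t>(count));
  }
  return indices;
}
```

Sampling with replacement is the usual basis of bootstrap aggregating.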

| Eigen::MatrixXd OpenANN::sampleRandomPatches | ( | const Eigen::MatrixXd & | images, |

| int | channels, | ||

| int | rows, | ||

| int | cols, | ||

| int | samples, | ||

| int | patchRows, | ||

| int | patchCols | ||

| ) |

Extract random patches from images.

| images | each row contains an original image |

| channels | number of color channels, e.g. 3 for RGB, 1 for grayscale |

| rows | height of the images |

| cols | width of the images |

| samples | number of sampled patches for each image |

| patchRows | height of image patches |

| patchCols | width of image patches |

| void OpenANN::scaleData | ( | Eigen::MatrixXd & | data, |

| double | min = -1.0, | ||

| double | max = 1.0 | ||

| ) |

Scale all values to the interval [min, max].

| data | matrix that contains, e.g., network inputs or outputs |

| min | minimum value of the output |

| max | maximum value of the output |
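Assuming a single global minimum and maximum over the whole matrix (the summary says "all values"), the scaling is a linear min-max map. The sketch below works on a flattened `std::vector`; the name is hypothetical, and mapping constant data to the midpoint of the interval is an assumption made to avoid division by zero. Per-column scaling would be a different transformation.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Linearly maps the smallest value in data to lo and the largest to hi.
// If all values are equal, they are set to the midpoint (an assumption).
void scaleDataSketch(std::vector<double>& data, double lo = -1.0, double hi = 1.0)
{
  assert(!data.empty() && lo < hi);
  const auto extremes = std::minmax_element(data.begin(), data.end());
  const double dataMin = *extremes.first;   // copied before data is modified
  const double dataMax = *extremes.second;
  for (double& v : data)
    v = (dataMax == dataMin)
            ? 0.5 * (lo + hi)
            : lo + (v - dataMin) * (hi - lo) / (dataMax - dataMin);
}
```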

| void OpenANN::scaledtanh | ( | const Eigen::MatrixXd & | a, |

| Eigen::MatrixXd & | z | ||

| ) |

| void OpenANN::scaledtanhDerivative | ( | const Eigen::MatrixXd & | z, |

| Eigen::MatrixXd & | gd | ||

| ) |

| void OpenANN::softmax | ( | Eigen::MatrixXd & | y) |
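No description is given for `softmax` here. Assuming it normalizes each row of `y` in place, with one row per instance as for the `Y` argument of `crossEntropy`, a numerically stable version subtracts the row maximum before exponentiating. The sketch uses `std::vector` rows and a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Replaces each row by exp(row - max(row)) / sum(exp(row - max(row))).
// Subtracting the row maximum does not change the result but keeps exp()
// from overflowing for large inputs.
void softmaxSketch(std::vector<std::vector<double>>& y)
{
  for (std::vector<double>& row : y)
  {
    assert(!row.empty());
    const double rowMax = *std::max_element(row.begin(), row.end());
    double sum = 0.0;
    for (double& v : row)
    {
      v = std::exp(v - rowMax);
      sum += v;
    }
    for (double& v : row)
      v /= sum;
  }
}
```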

| void OpenANN::split | ( | std::vector< DataSetView > & | groups, |

| DataSet & | dataset, | ||

| int | numberOfGroups, | ||

| bool | shuffling = true | ||

| ) |

Split the current DataSet into a specific number of DataSetView groups.

| groups | std::vector reference that will be filled with new dataset views. |

| dataset | the dataset whose instances the views refer to |

| numberOfGroups | the number of DataSetViews generated by this call |

| shuffling | if true, the dataset is shuffled before it is split |
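One plausible way to form the groups is to distribute the (optionally shuffled) instance indices round-robin, so that group sizes differ by at most one. This is a hypothetical sketch on plain indices; OpenANN fills a vector of `DataSetView` objects, and its exact distribution scheme is not described here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Distributes the indices 0..size-1 over numberOfGroups groups whose sizes
// differ by at most one; the indices are shuffled first if requested.
std::vector<std::vector<std::size_t>> splitIndicesSketch(
    std::size_t size, int numberOfGroups, bool shuffling, std::mt19937& rng)
{
  assert(numberOfGroups > 0);
  const std::size_t groupCount = static_cast<std::size_t>(numberOfGroups);
  std::vector<std::size_t> indices(size);
  std::iota(indices.begin(), indices.end(), std::size_t{0});
  if (shuffling)
    std::shuffle(indices.begin(), indices.end(), rng);
  std::vector<std::vector<std::size_t>> groups(groupCount);
  for (std::size_t i = 0; i < size; ++i)
    groups[i % groupCount].push_back(indices[i]);
  return groups;
}
```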

| void OpenANN::split | ( | std::vector< DataSetView > & | groups, |

| DataSet & | dataset, | ||

| double | ratio = 0.5, | ||

| bool | shuffling = true | ||

| ) |

Split the current DataSet into two DataSetViews whose sizes are controlled by a ratio parameter.

| groups | std::vector reference that will be filled with two dataset views. |

| dataset | the dataset whose instances the views refer to |

| ratio | sets the ratio of the sample count between the two sets |

| shuffling | if true, the dataset is shuffled before it is split |

| double OpenANN::sse | ( | Learner & | learner, |

| DataSet & | dataSet | ||

| ) |

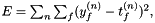

Sum of squared errors.

This error function is usually used for regression problems.

where

where  is the predicted output and

is the predicted output and  the desired output.

the desired output.

| learner | learned model |

| dataSet | dataset |

| void OpenANN::train | ( | Net & | net, |

| std::string | algorithm, | ||

| ErrorFunction | errorFunction, | ||

| const StoppingCriteria & | stop, | ||

| bool | reinitialize = false, | ||

| bool | dropout = false | ||

| ) |

Train a feedforward neural network with supervised learning.

| net | neural network |

| algorithm | a registered algorithm, e.g. "MBSGD", "LMA", "CG", "LBFGS" or "CMAES" |

| errorFunction | error function to optimize |

| stop | stopping criteria |

| reinitialize | should the weights be initialized before optimization? |

| dropout | use dropout for regularization |

| void OpenANN::useAllCores | ( | ) |

Enable all cores for OpenMP.

The number of available cores must be specified when OpenANN is built; it can be set with the CMake variable PARALLEL_CORES. Do not set it higher than the number of physical cores: virtual (hyper-threaded) cores usually slow matrix operations down.

| double OpenANN::weightedAccuracy | ( | Learner & | learner, |

| DataSet & | dataSet, | ||

| Eigen::VectorXd | weights | ||

| ) |

Accuracy on weighted data set.

| learner | learned model |

| dataSet | dataset |

| weights | weights for each instance; they must sum to one |
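A natural reading of "accuracy on a weighted data set" is the total weight of the correctly classified instances. The sketch below follows that assumption on already decoded class labels (hypothetical names, not OpenANN's `Learner`/`DataSet` interface). With all weights equal to 1/N it reduces to the ordinary accuracy.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sum of the weights of the correctly classified instances.
// With weights summing to one the result lies in [0, 1].
double weightedAccuracySketch(const std::vector<int>& predictedClasses,
                              const std::vector<int>& actualClasses,
                              const std::vector<double>& weights)
{
  assert(predictedClasses.size() == actualClasses.size() &&
         actualClasses.size() == weights.size());
  double result = 0.0;
  for (std::size_t n = 0; n < weights.size(); ++n)
    if (predictedClasses[n] == actualClasses[n])
      result += weights[n];
  return result;
}
```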

1.8.4
