OpenANN

1.1.0

An open source library for artificial neural networks.

We will solve a very simple problem here to demonstrate the API of OpenANN.

The XOR problem cannot be solved by a perceptron (a neural network with just one neuron). This limitation contributed to the decline of neural network research in the 1970s, which lasted until backpropagation was popularized in the 1980s.

The data set is simple:

| $x_1$ | $x_2$ | $y$ |
|---|---|---|
| 0 | 1 | 1 |
| 0 | 0 | 0 |
| 1 | 1 | 0 |
| 1 | 0 | 1 |

That means  is on whenever

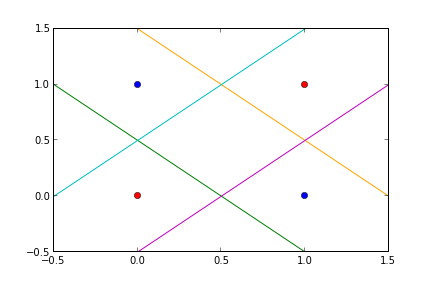

is on whenever  . The problem is that you cannot draw a line that separates the two classes 0 and 1. They are not linearly separable as you can see in the following picture. Therefore, we need at least one hidden layer to solve the problem. In the next sections you will find C++ code and Python code that solves this problem.

. The problem is that you cannot draw a line that separates the two classes 0 and 1. They are not linearly separable as you can see in the following picture. Therefore, we need at least one hidden layer to solve the problem. In the next sections you will find C++ code and Python code that solves this problem.
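The separability argument can be checked with a small sketch in plain Python. It does not use the OpenANN API. The function names (`perceptron`, `two_layer`, `fits_xor`) and the hand-picked weights are assumptions made for illustration only. The grid search over single-neuron weights is a coarse illustration, not a proof, while the hidden-layer network uses one OR-like and one AND-like unit.

```python
# XOR data set from the table above: (x1, x2) -> y
DATA = [((0, 1), 1), ((0, 0), 0), ((1, 1), 0), ((1, 0), 1)]


def step(a):
    """Threshold activation: the unit is on (1) when its input is positive."""
    return 1 if a > 0 else 0


def perceptron(x, w1, w2, b):
    """A single neuron: one weighted sum followed by a threshold."""
    return step(w1 * x[0] + w2 * x[1] + b)


def two_layer(x):
    """One hidden layer with two hand-picked units, then an output unit."""
    h_or = step(x[0] + x[1] - 0.5)    # on if at least one input is on
    h_and = step(x[0] + x[1] - 1.5)   # on only if both inputs are on
    return step(h_or - h_and - 0.5)   # on if OR holds but AND does not


def fits_xor(predict):
    """True if the predictor reproduces every row of the data set."""
    return all(predict(x) == y for x, y in DATA)


# Try every single-neuron weight setting on a coarse grid (-2.0 to 2.0, step 0.25).
grid = [i / 4 for i in range(-8, 9)]
single_neuron_solutions = [
    (w1, w2, b)
    for w1 in grid for w2 in grid for b in grid
    if fits_xor(lambda x: perceptron(x, w1, w2, b))
]

print("single-neuron solutions found:", len(single_neuron_solutions))
print("hidden-layer network fits XOR:", fits_xor(two_layer))
```

No weight setting on the grid lets the single neuron reproduce all four rows, whereas the network with one hidden layer does. A trained network would learn its weights from the data instead of having them set by hand.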

Compile it with pkg-config and g++ (make sure that pkg-config is installed, otherwise you might get misleading errors):

    g++ main.cpp -o openann `pkg-config --cflags --libs openann`

Classification

Regression

Reinforcement Learning

We also have some Benchmarks that show how you can use ANNs and compare different architectures.
